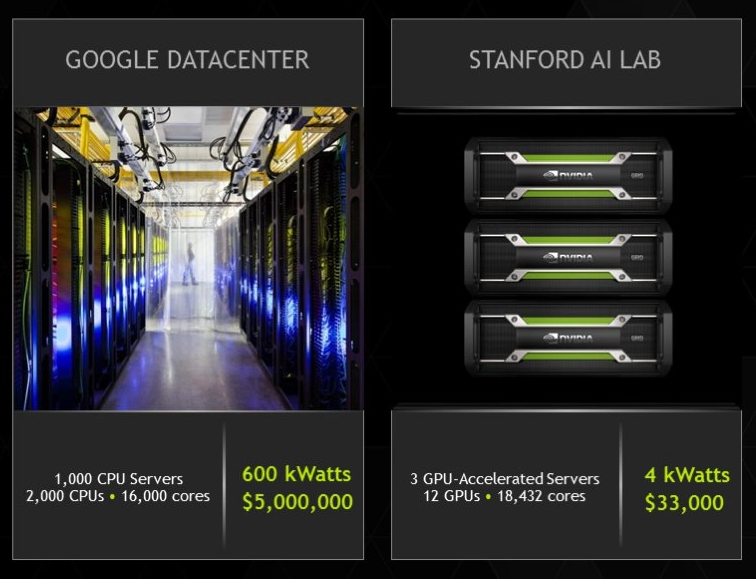

GPU accelerated computing versus cluster computing for machine / deep learning

In 2013, Microsoft Research released the article "Nobody ever got fired for buying a cluster". Even then, optimizing computation on a single machine's CPUs was already a serious alternative to scaling out on a cluster.

Today, this is even more true with GPUs:

Benchmarks of the BIDMach library show that the main classification algorithms run faster on a single GPU instance than on a cluster of a hundred CPU instances running distributed frameworks such as Spark.

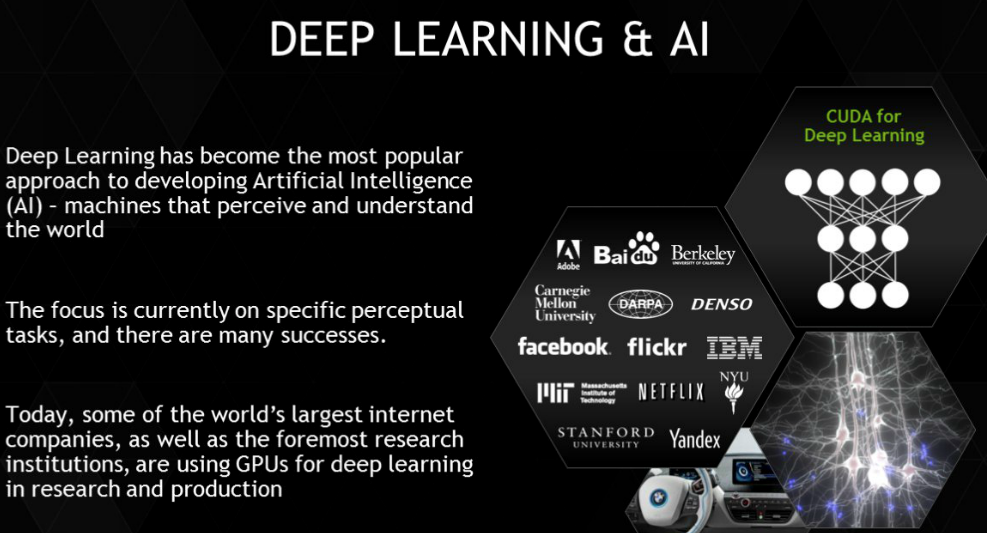

Deep learning brings a new approach:

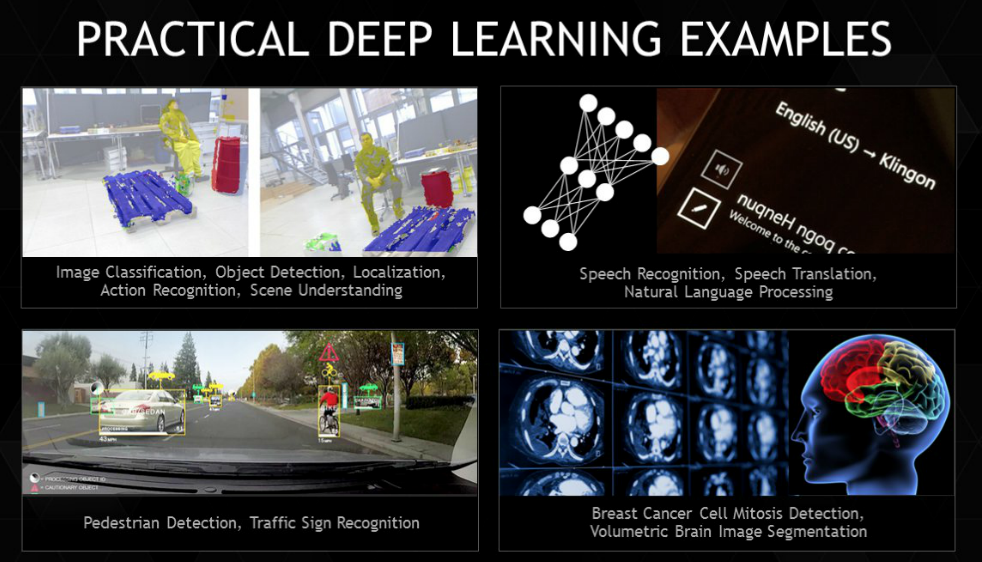
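To make "deep" a bit more concrete: a deep network is a stack of layers, each computing a weighted sum of its inputs followed by a nonlinearity, so that every layer builds new features from the output of the previous one. The minimal NumPy sketch below shows only a forward pass. The helper names (`init_layers`, `forward`), the layer sizes, the ReLU activation and the random weights are illustrative assumptions; in practice the weights are learned from data with a framework such as Caffe.

```python
import numpy as np

def relu(x):
    # Rectified linear unit: keeps positive values, zeroes out the rest
    return np.maximum(x, 0.0)

def init_layers(sizes, seed=0):
    # Hypothetical helper: one (weights, bias) pair per layer, randomly initialized.
    # In a real network these parameters are learned by gradient descent.
    rng = np.random.default_rng(seed)
    return [
        (rng.standard_normal((n_out, n_in)) * np.sqrt(2.0 / n_in), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def forward(layers, x):
    # Each hidden layer computes new features from the previous layer's output
    for w, b in layers[:-1]:
        x = relu(w @ x + b)
    # Last layer: raw class scores, turned into probabilities with a softmax
    w, b = layers[-1]
    scores = w @ x + b
    exp = np.exp(scores - scores.max())  # subtract the max for numerical stability
    return exp / exp.sum()

# Example: 64 input values (e.g. an 8x8 image), two hidden layers, 10 classes
layers = init_layers([64, 32, 16, 10])
probs = forward(layers, np.random.default_rng(1).random(64))
print(probs.shape, probs.sum())
```

Each layer is essentially a matrix product, which is exactly the kind of dense computation GPUs accelerate well.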

Practical examples from NVIDIA:

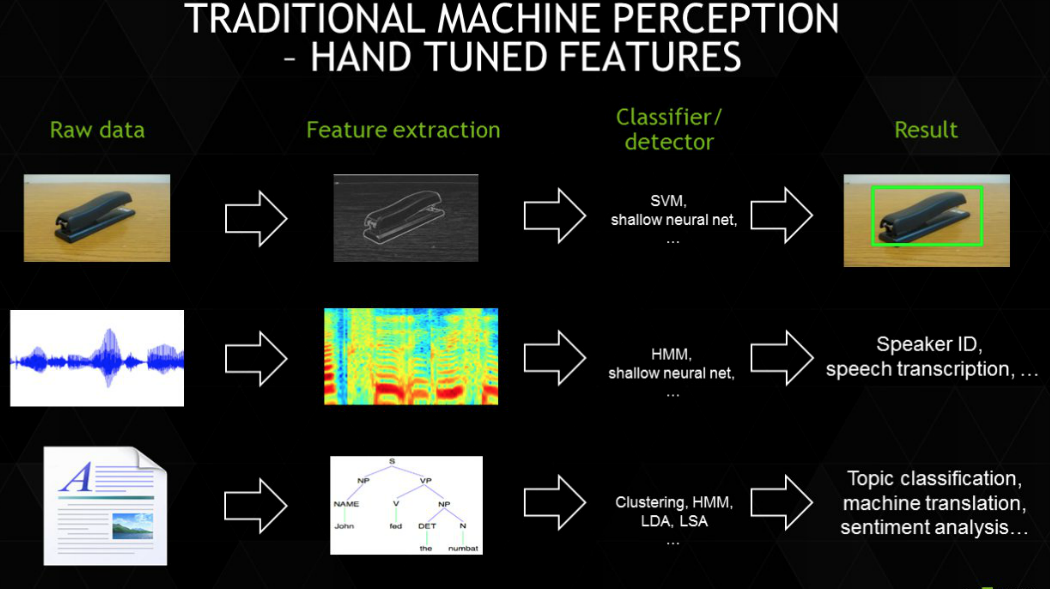

The traditional approach relies on feature engineering, where the main problem was finding the right definition of the features:

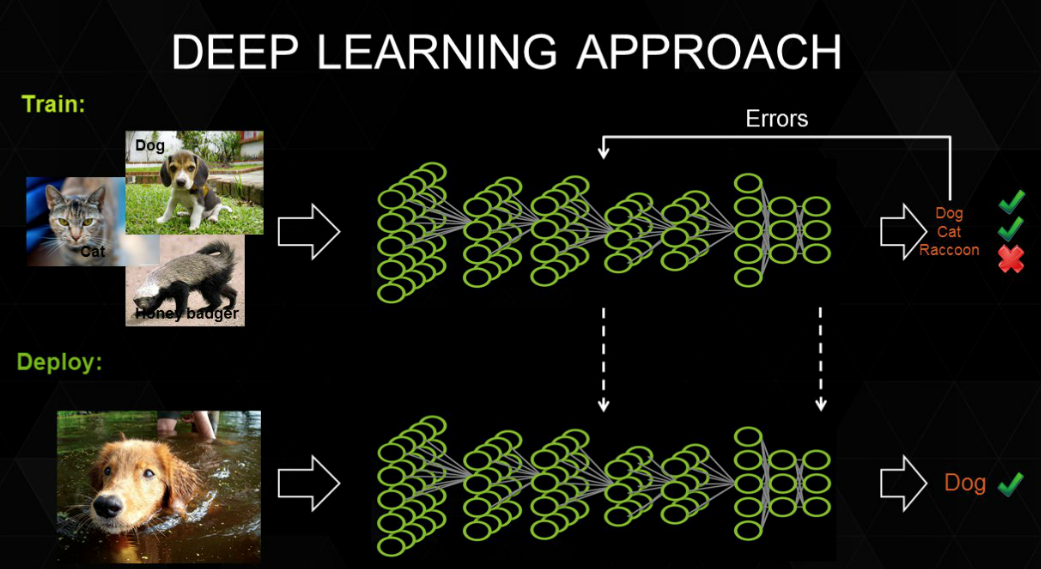
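As an illustration of what "defining the features" means in the traditional pipeline, here is a minimal sketch of hand-designed features for a grayscale image with values in [0, 1]. The function name `handcrafted_features`, the three chosen features and the edge threshold are hypothetical choices made for the example, exactly the kind of decisions an engineer had to make by hand.

```python
import numpy as np

def handcrafted_features(image, edge_threshold=0.5):
    # Hypothetical hand-designed features: the engineer decides what matters.
    image = np.asarray(image, dtype=float)
    mean_brightness = image.mean()
    contrast = image.std()
    # Absolute differences between neighboring pixels as a crude edge detector
    dx = np.abs(np.diff(image, axis=1))  # horizontal neighbors
    dy = np.abs(np.diff(image, axis=0))  # vertical neighbors
    n_edges = np.count_nonzero(dx > edge_threshold) + np.count_nonzero(dy > edge_threshold)
    edge_density = n_edges / (dx.size + dy.size)
    return np.array([mean_brightness, contrast, edge_density])

# Example: a flat gray image versus a checkerboard
flat = np.full((8, 8), 0.3)
checkerboard = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)
print(handcrafted_features(flat))
print(handcrafted_features(checkerboard))
```

These vectors would then be fed to a classifier such as an SVM. If the chosen features miss what actually distinguishes the classes, the classifier has little to work with, which is why finding the right features was the hard part.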

And the new deep learning approach:

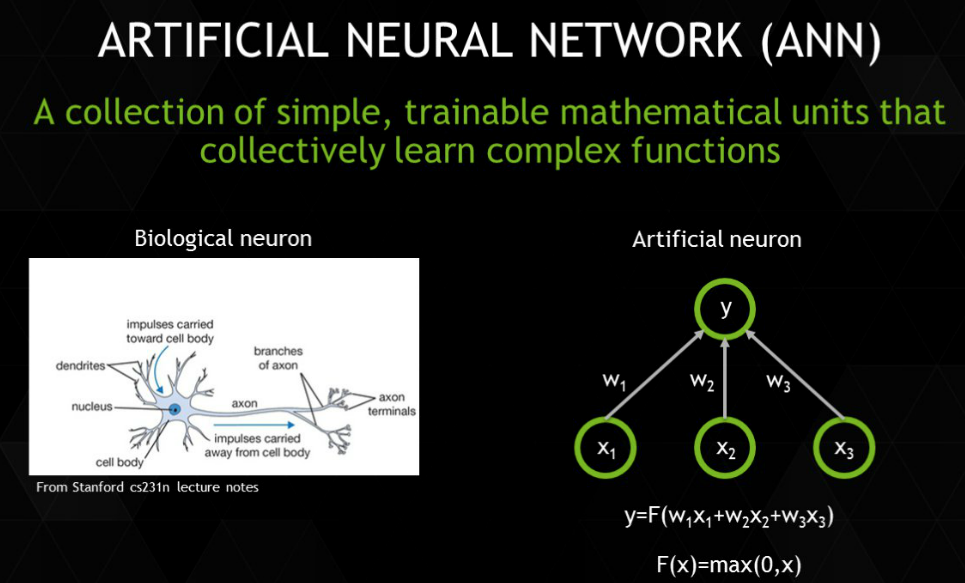

is inspired by nature:

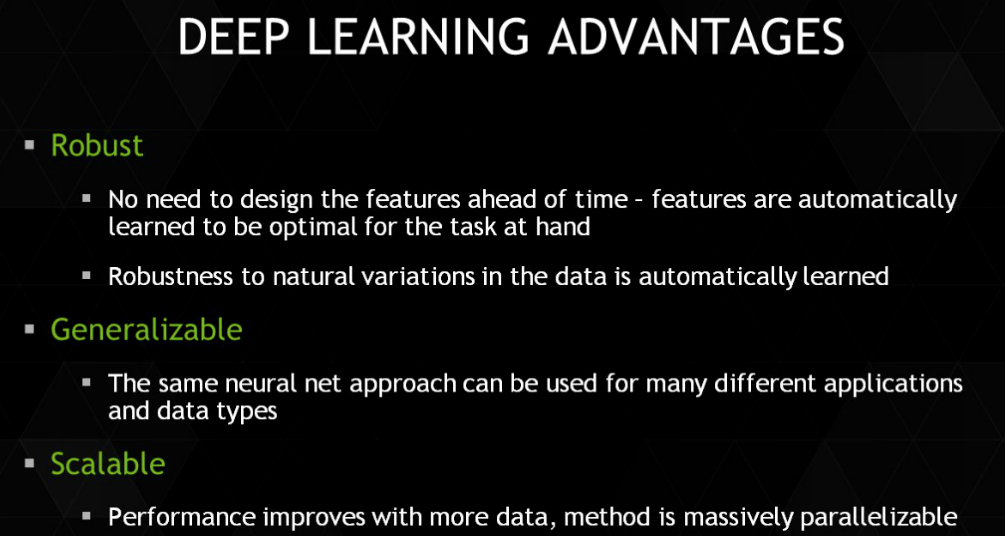

with the following advantages:

Here are my installs of Caffe, Berkeley's deep learning library, and DIGITS, NVIDIA's interactive deep learning interface, on NVIDIA GPUs:

- on an iMac
- on AWS g2 instances, with my ready-to-deploy AWS OpsWorks Chef recipe.

Installs on mobile phones:

Clusters remain very useful for parsing and manipulating large files, for example parsing Wikipedia pages with Spark.